Appliances for science are overpriced. Massively. Heating mats are a case in point: they do little more than measure the temperature, compare it to a set point and adjust the heat output accordingly. This might sound complicated, but controller units that do exactly that have been around for a long time and are therefore not very expensive, certainly not as expensive as companies would have us believe. Labrigger has a post on DIY heating pads built from industrial parts by Taro Ishikawa, which I found very interesting and ingenious. So I embarked on a little adventure and built one for myself.

Things did turn out to be a little tricky at times, especially given my rather rusty knowledge of circuits. Luckily, a friend from a collaborating group was happy to explain even the most basic principles of electric circuits with great patience, and helped me as I put everything together. I bought all my parts from RS Components, in many ways the British equivalent of McMaster-Carr, which is mentioned in the original post.

First off, the idea: we want a system that keeps the mouse at a certain temperature, say 38 °C. In more technical terms, we have a set point of 38 °C and a measured variable (body temperature) that we want to hold at that set point. We do this by changing the temperature of the heating mat. Physiologists will probably think of a simple negative feedback mechanism here, and that's exactly what it is. This could be done manually: take a thermometer and a heating mat with adjustable heat output, and turn the heat up or down depending on the body temperature. In fact, many companies sell exactly that kind of system for hundreds (if not thousands) of pounds. A better system has a controller that regulates the heat output automatically, so we can concentrate on whatever we are doing (surgery, for example). The latter is the kind of system I've been working on.
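The simplest automatic version of this negative feedback loop is on/off control with a small hysteresis band, the way a household thermostat works: heater fully on when too cold, fully off when too warm. The sketch below is illustrative only. The ±0.5 °C band and the first-order "thermal model" (heating rate, heat-loss rate, 22 °C room) are made-up assumptions, not measurements of a real mouse or mat.

```python
def heater_should_be_on(temp_c: float, heater_on: bool,
                        setpoint_c: float = 38.0, band_c: float = 0.5) -> bool:
    """On/off (bang-bang) negative feedback with hysteresis."""
    if temp_c < setpoint_c - band_c:
        return True   # too cold: switch the heater on
    if temp_c > setpoint_c + band_c:
        return False  # too warm: switch the heater off
    return heater_on  # inside the band: keep the current state to avoid rapid switching


def simulate(steps: int = 2000, dt_s: float = 0.1) -> list[float]:
    """Run the controller against a crude, hypothetical first-order thermal model."""
    ambient_c = 22.0
    heating_rate = 1.0  # °C/s added while the heater is on (assumed)
    loss_rate = 0.01    # 1/s; heat loss proportional to the excess over ambient (assumed)
    temp_c, heater_on = ambient_c, False
    trace = []
    for _ in range(steps):
        heater_on = heater_should_be_on(temp_c, heater_on)
        heat_in = heating_rate if heater_on else 0.0
        temp_c += dt_s * (heat_in - loss_rate * (temp_c - ambient_c))
        trace.append(temp_c)
    return trace


trace = simulate()
settled = trace[-1000:]
print(f"settled range: {min(settled):.2f} to {max(settled):.2f} °C")
```

Once warmed up, the temperature cycles just around the band edges. The heater only ever runs at full power or not at all, which is why a controller with a proportional output gives finer regulation.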

The principal components of the system are:

PID Controller (Control Unit)

In industry, control units that keep a variable at a set point have been around for a long time, the most common of which are PID controllers. I bought one with a linear DC output (0-10 V; RS 701-2788), as opposed to most standard models, which come with relay outputs only. This gives the fine control a heating mat needs.
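For readers who haven't met one before, here is a minimal discrete-time PID loop of the kind such a controller runs, with its output clamped to a 0-10 V range like the linear output described above. It is a textbook sketch, not the firmware of the RS unit, whose internal algorithm isn't documented here. The gains, the time step and the first-order mat model in the usage lines are made-up illustrative values, and the anti-windup rule (stop integrating while the output is saturated) is one common choice among several.

```python
from typing import Optional


class PID:
    """Textbook PID controller with output clamping and simple anti-windup."""

    def __init__(self, kp: float, ki: float, kd: float, setpoint: float,
                 out_min: float = 0.0, out_max: float = 10.0) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.setpoint = setpoint
        self.out_min, self.out_max = out_min, out_max
        self._integral = 0.0
        self._prev_measurement: Optional[float] = None

    def update(self, measurement: float, dt: float) -> float:
        """Return the new output (here: volts) for the latest measurement."""
        error = self.setpoint - measurement
        # Differentiate the measurement, not the error, to avoid a spike when the set point changes.
        if self._prev_measurement is None:
            derivative = 0.0
        else:
            derivative = -(measurement - self._prev_measurement) / dt
        self._prev_measurement = measurement

        self._integral += error * dt
        output = self.kp * error + self.ki * self._integral + self.kd * derivative
        clamped = min(max(output, self.out_min), self.out_max)
        if clamped != output:
            # Anti-windup: undo this step's integration while the output is saturated.
            self._integral -= error * dt
        return clamped


# Usage: drive a crude, hypothetical first-order model of the mat towards 38 °C.
pid = PID(kp=1.0, ki=0.1, kd=0.0, setpoint=38.0)
temp_c, dt_s = 22.0, 0.1
for _ in range(3000):
    volts = pid.update(temp_c, dt_s)
    # Assumed model: 1 °C/s of heating per volt, losses of 0.1/s times the excess over 22 °C.
    temp_c += dt_s * (1.0 * volts - 0.1 * (temp_c - 22.0))
print(f"{temp_c:.2f} °C at {volts:.2f} V")
```

The integral term is what lets the loop settle exactly on the set point while still delivering the steady output needed to replace the heat lost to the room.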

What a beauty. Here you can see it with the mains supply connected.

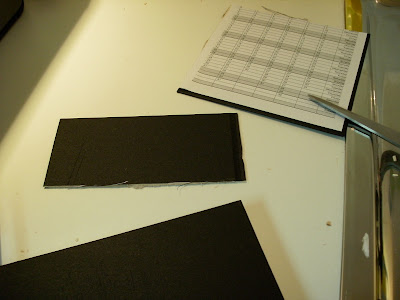

Heating Mat

The heating mat is a rather simple thing: it turns electricity into heat. Wow.

Also bought from RS (245-528): 50 x 100 mm, with a maximum output of 5 W at 12 V (DC, of course). Maximum temperature: 200 °C, much more than we need.
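Treating the mat as a plain resistor whose resistance doesn't change with temperature (an assumption; real heating elements drift a little as they warm up), its rating fixes everything else via P = V²/R. The short calculation below works out the mat's resistance, its current at 12 V, and how much power a 10 V drive alone would deliver.

```python
RATED_POWER_W = 5.0    # from the RS 245-528 rating quoted above
RATED_VOLTAGE_V = 12.0


def resistance_ohm(power_w: float, voltage_v: float) -> float:
    """Resistance of an ideal resistive heater: R = V^2 / P."""
    return voltage_v ** 2 / power_w


def power_w(voltage_v: float, resistance: float) -> float:
    """Power dissipated in a resistor at a given voltage: P = V^2 / R."""
    return voltage_v ** 2 / resistance


r = resistance_ohm(RATED_POWER_W, RATED_VOLTAGE_V)  # 28.8 ohm
i = RATED_VOLTAGE_V / r                             # about 0.42 A at full power
p10 = power_w(10.0, r)                              # about 3.5 W at 10 V
print(f"R = {r:.1f} ohm, I = {i:.2f} A at 12 V, P = {p10:.2f} W at 10 V")
```

Because power scales with the square of the voltage, 10 V gives the mat only about 70 % of its rated power.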

Thermocouple (sensor)

We use a thermocouple as our temperature sensor. It consists of two dissimilar metal conductors joined at one end, and it produces a small voltage that depends on the temperature difference between that junction and the other (reference) end. All we need to know is that there are different types, and that we need one our PID controller can read (ours can read most types).
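To get a feel for the signal sizes involved: near room temperature a K-type thermocouple produces roughly 41 µV per °C of difference between its measuring junction and its reference end, and the controller adds the reference-end temperature back on (cold-junction compensation). The linear sketch below uses that approximate coefficient as an assumption. Real instruments use the standard NIST reference polynomials, and a T-type probe has a somewhat different coefficient.

```python
SEEBECK_K_TYPE_V_PER_C = 41e-6  # approximate K-type sensitivity near room temperature (assumption)


def thermocouple_temp_c(voltage_v: float, cold_junction_c: float,
                        seebeck_v_per_c: float = SEEBECK_K_TYPE_V_PER_C) -> float:
    """Linear approximation: the voltage reflects the hot-minus-cold temperature difference."""
    return cold_junction_c + voltage_v / seebeck_v_per_c


def thermocouple_voltage_v(temp_c: float, cold_junction_c: float,
                           seebeck_v_per_c: float = SEEBECK_K_TYPE_V_PER_C) -> float:
    """Inverse of the above: expected voltage for a given probe temperature."""
    return (temp_c - cold_junction_c) * seebeck_v_per_c


# A probe at 38 °C with the controller's terminals at 22 °C gives well under a millivolt.
v = thermocouple_voltage_v(38.0, 22.0)
print(f"{v * 1e3:.3f} mV -> {thermocouple_temp_c(v, 22.0):.1f} °C")
```

Signals this small are also why thermocouple leads pick up noise easily.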

In the pictures below you can see a K-type thermocouple, but a T-type probe suitable for mice has been ordered and can easily be swapped in.

Non-Inverting Amplifier

The linear output of our PID controller tops out at 10 V, but the heating mat is rated for 12 V. I realise I could probably get away with just using the 0-10 V range, but I don't like operating electrical components at their output limits. Therefore I put together a simple non-inverting amplifier. The central component is an operational amplifier (RS 652-5678):
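An ideal non-inverting amplifier has a gain of G = 1 + R_f/R_g, where R_f is the feedback resistor (output to inverting input) and R_g the resistor from the inverting input to ground. Mapping the controller's 10 V maximum onto the mat's 12 V therefore needs G = 1.2, i.e. R_f/R_g = 0.2. The resistor values below (2 kΩ and 10 kΩ) are hypothetical examples, not necessarily the ones used in this build. Note also that the op amp's supply must sit above 12 V for its output to actually reach 12 V.

```python
def non_inverting_gain(r_feedback_ohm: float, r_ground_ohm: float) -> float:
    """Ideal op-amp non-inverting amplifier: G = 1 + Rf / Rg."""
    return 1.0 + r_feedback_ohm / r_ground_ohm


def feedback_resistor_for(target_gain: float, r_ground_ohm: float) -> float:
    """Rf needed for a given gain once Rg has been chosen."""
    return (target_gain - 1.0) * r_ground_ohm


pid_max_v, mat_v = 10.0, 12.0
target = mat_v / pid_max_v                    # 1.2
rf = feedback_resistor_for(target, 10_000.0)  # hypothetical Rg = 10 kOhm -> Rf of about 2 kOhm
gain = non_inverting_gain(2_000.0, 10_000.0)
print(f"target gain {target:.2f}, Rf = {rf:.0f} ohm, 10 V in -> {gain * pid_max_v:.1f} V out")
```

Only the ratio of the two resistors matters for the gain, so other pairs with the same 1:5 ratio would work as well.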

The wiring diagram for the opamp.

Building Notes

Here is a quick documentation of the building process:

First, connecting the mains. Care has been taken to keep the exposed contacts to a minimum.

It's alive!

The connector on the bottom left is the thermocouple. The two green wires are the + and - of the linear output.

Just to double-check, I connected the PID controller to my PC via an NI DAQ and read the linear output. What you see are slow ramps as I increase and decrease the set point. Clearly, this signal needs some cleaning up, so I added bypass capacitors and an RC low-pass filter between the PID controller and the opamp. Yes, I took a photo of my screen instead of taking a screenshot. Lazy logic.
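A first-order RC low-pass filter lets slow changes (like the set-point ramps) through and attenuates anything much faster than its cutoff frequency f_c = 1/(2πRC). The component values below (10 kΩ, 10 µF) are hypothetical, not the ones in the build. The second function is the standard discrete-time equivalent (an exponential moving average with time constant RC), which one could apply to the DAQ samples in software instead; it is included only to make the filter's behaviour concrete.

```python
import math


def rc_cutoff_hz(r_ohm: float, c_farad: float) -> float:
    """-3 dB cutoff of a first-order RC low-pass filter: f_c = 1 / (2*pi*R*C)."""
    return 1.0 / (2.0 * math.pi * r_ohm * c_farad)


def rc_lowpass(samples: list[float], dt_s: float, tau_s: float) -> list[float]:
    """Discrete first-order low-pass (exponential smoothing) with time constant tau = R*C."""
    if not samples:
        return []
    alpha = dt_s / (tau_s + dt_s)  # smoothing factor derived from the RC time constant
    out = [samples[0]]
    for x in samples[1:]:
        out.append(out[-1] + alpha * (x - out[-1]))
    return out


r, c = 10e3, 10e-6  # hypothetical: 10 kOhm and 10 uF, so tau = 0.1 s
print(f"cutoff = {rc_cutoff_hz(r, c):.2f} Hz")
step = rc_lowpass([0.0] + [1.0] * 100, dt_s=0.01, tau_s=r * c)
print(f"step response after 1 s: {step[-1]:.4f}")
```

With a 0.1 s time constant the output follows a step to within a fraction of a percent after one second, far faster than the set-point ramps but slow enough to smooth out fast noise.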

Here is an overview of the whole circuit. It's really just a basic non-inverting amplifier plus the filter components mentioned above. The black heatsink in the middle keeps the opamp from overheating.
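Why the heatsink? The op amp's output stage drops whatever voltage is left between its supply rail and its output while carrying the full mat current, so it dissipates P = (V_s - V_out) · V_out / R_load. Assuming, purely for illustration, a single 15 V supply, the 28.8 Ω resistance implied by the mat's 5 W at 12 V rating, and ignoring the op amp's own quiescent current, the numbers come out as below. The worst case sits at V_out = V_s/2, where P = V_s²/(4R).

```python
SUPPLY_V = 15.0            # hypothetical single supply rail
MAT_OHM = 12.0 ** 2 / 5.0  # 28.8 ohm, from the 5 W at 12 V rating


def opamp_dissipation_w(v_out: float, v_supply: float = SUPPLY_V,
                        r_load: float = MAT_OHM) -> float:
    """Power burned in the output stage while sourcing current into a resistive load."""
    load_current_a = v_out / r_load
    return (v_supply - v_out) * load_current_a


at_full_output = opamp_dissipation_w(12.0)    # about 1.25 W
worst_case = SUPPLY_V ** 2 / (4.0 * MAT_OHM)  # about 1.95 W, reached at v_out = 7.5 V
print(f"{at_full_output:.2f} W at 12 V out, {worst_case:.2f} W worst case")
```

A watt or two is well beyond what a small IC package can shed on its own, so a heatsink is a sensible precaution.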

Here you can see the thermocouple taped to the heating mat on the right, and the PID controller on the left.

I also used an oscilloscope (left) to monitor the output of either the PID controller or the amplifier, which helped me keep an eye on the voltages in the system and track down mistakes.

Next Steps

The current system keeps the heating mat at a set temperature, which is already quite handy. Next, I will tune the system so that it regulates the heating mat based on a thermocouple measuring the mouse's body temperature directly. I had no idea how much science has gone into tuning algorithms for PID controllers (some are even patented), but with a lot of care this should be doable. Bear in mind that we won't use this heating mat near other electrically sensitive equipment, so no precautions against mains noise have been taken (yet). I will also solder all this onto a stripboard and box it up so it looks nice and is safe to use with animals; expect an update after Christmas.
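One of the oldest and simplest tuning recipes is the classic Ziegler-Nichols ultimate-gain method. With the I and D terms switched off, you raise the proportional gain until the temperature oscillates steadily, note that "ultimate" gain K_u and the oscillation period T_u, and derive all three gains from them. This is not necessarily the method that will end up being used here. For a slow thermal system it tends to give an aggressive, overshooting response that usually needs softening, and the K_u and T_u values in the example are invented.

```python
from dataclasses import dataclass


@dataclass
class PidGains:
    kp: float
    ki: float  # integral gain, Kp / Ti
    kd: float  # derivative gain, Kp * Td


def ziegler_nichols_pid(ku: float, tu_s: float) -> PidGains:
    """Classic Ziegler-Nichols rules: Kp = 0.6 Ku, Ti = Tu / 2, Td = Tu / 8."""
    kp = 0.6 * ku
    ti = 0.5 * tu_s    # integral time
    td = 0.125 * tu_s  # derivative time
    return PidGains(kp=kp, ki=kp / ti, kd=kp * td)


# Invented example: steady oscillation at Ku = 10 (V per °C) with a 20 s period.
gains = ziegler_nichols_pid(ku=10.0, tu_s=20.0)
print(gains)
```

Commercial controllers often ask for a proportional band and integral/derivative times in seconds rather than Kp, Ki and Kd, so the numbers may need converting to the units the front panel expects.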